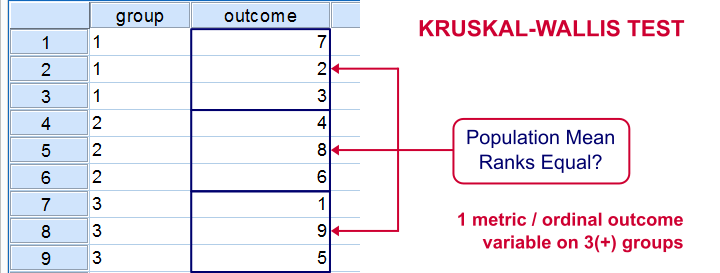

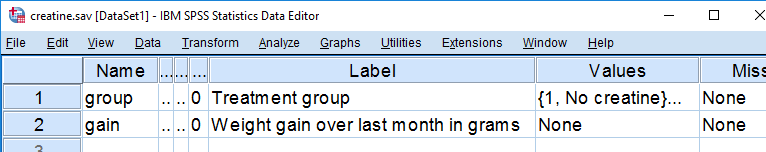

The Kruskal-Wallis test is an alternative for a one-way ANOVA if the assumptions of the latter are violated. We'll show in a minute why that's the case with creatine.sav, the data we'll use in this tutorial. But let's first take a quick look at what's in the data anyway.

Quick Data Description

Our data contain the result of a small experiment regarding creatine, a supplement that's popular among body builders. These were divided into 3 groups: some didn't take any creatine, others took it in the morning and still others took it in the evening. After doing so for a month, their weight gains were measured. The basic research question is

does the average weight gain depend on

the creatine condition to which people were assigned?

That is, we'll test if three means -each calculated on a different group of people- are equal. The most likely test for this scenario is a one-way ANOVA but using it requires some assumptions. Some basic checks will tell us that these assumptions aren't satisfied by our data at hand.

Data Check 1 - Histogram

A very efficient data check is to run histograms on all metric variables. The fastest way for doing so is by running the syntax below.

frequencies gain

/formats notable

/histogram.

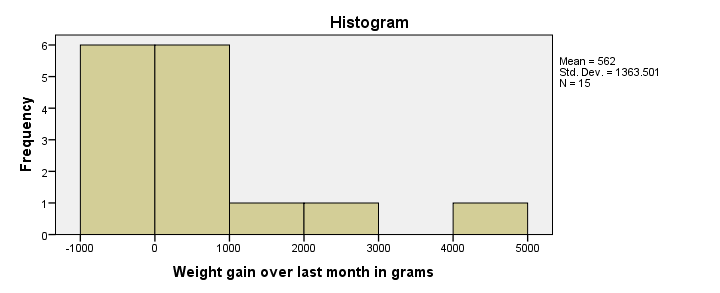

Histogram Result

First, our histogram looks plausible with all weight gains between -1 and +5 kilos, which are reasonable outcomes over one month. However, our outcome variable is not normally distributed as required for ANOVA. This isn't an issue for larger sample sizes of, say, at least 30 people in each group. The reason for this is the central limit theorem. It basically states that for reasonable sample sizes the sampling distribution for means and sums are always normally distributed regardless of a variable’s original distribution. However, for our tiny sample at hand, this does pose a real problem.

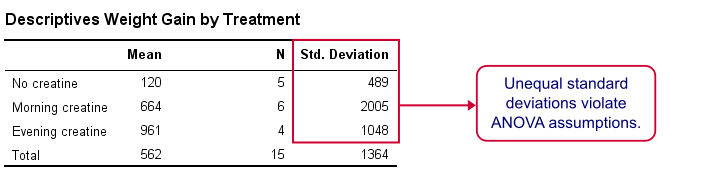

Data Check 2 - Descriptives per Group

Right, now after making sure the results for weight gain look credible, let's see if our 3 groups actually have different means. The fastest way to do so is a simple MEANS command as shown below.

means gain by group.

SPSS MEANS Output

First, note that our evening creatine group (4 participants) gained an average of 961 grams as opposed to 120 grams for “no creatine”. This suggests that creatine does make a real difference.

But don't overlook the standard deviations for our groups: they are very different but ANOVA requires them to be equal.The assumption of equal population standard deviations for all groups is known as homoscedasticity. This is a second violation of the ANOVA assumptions.

Kruskal-Wallis Test

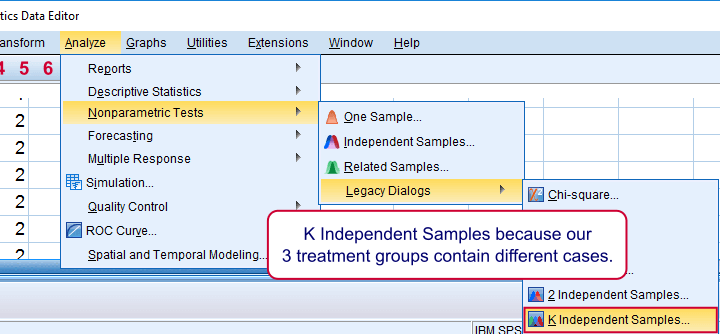

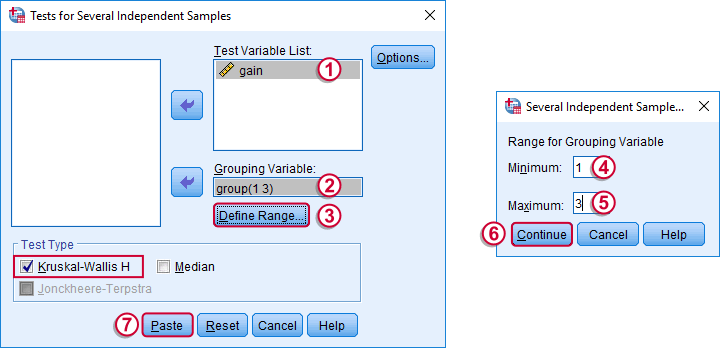

So what should we do now? We'd like to use an ANOVA but our data seriously violates its assumptions. Well, a test that was designed for precisely this situation is the Kruskal-Wallis test which doesn't require these assumptions. It basically replaces the weight gain scores with their rank numbers and tests whether these are equal over groups. We'll run it by following the screenshots below.

Running a Kruskal-Wallis Test in SPSS

We use if we compare 3 or more groups of cases. They are “independent” because our groups don't overlap (each case belongs to only one creatine condition).

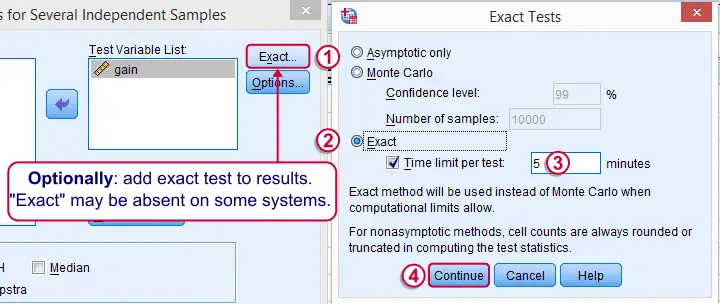

Depending on your license, your SPSS version may or may have the option shown below. It's fine to skip this step otherwise.

SPSS Kruskal-Wallis Test Syntax

Following the previous screenshots results in the syntax below. We'll run it and explain the output.

NPAR TESTS

/K-W=gain BY group(1 3)

/MISSING ANALYSIS.

SPSS Kruskal-Wallis Test Output

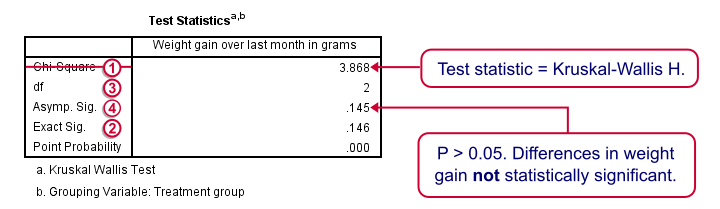

We'll skip the “RANKS” table and head over to the “Test Statistics” shown below.

Our test statistic -incorrectly labeled as “Chi-Square” by SPSS- is known as Kruskal-Wallis H. A larger value indicates larger differences between the groups we're comparing. For our data it's roughly 3.87. We need to know its sampling distribution for evaluating whether this is unusually large.

Our test statistic -incorrectly labeled as “Chi-Square” by SPSS- is known as Kruskal-Wallis H. A larger value indicates larger differences between the groups we're comparing. For our data it's roughly 3.87. We need to know its sampling distribution for evaluating whether this is unusually large.

Exact Sig. uses the exact (but very complex) sampling distribution of H. However, it turns out that if each group contains 4 or more cases, this exact sampling distribution is almost identical to the (much simpler) chi-square distribution.

Exact Sig. uses the exact (but very complex) sampling distribution of H. However, it turns out that if each group contains 4 or more cases, this exact sampling distribution is almost identical to the (much simpler) chi-square distribution.

We therefore usually approximate the p-value with a chi-square distribution. If we compare k groups, we have k - 1 degrees of freedom, denoted by df in our output.

We therefore usually approximate the p-value with a chi-square distribution. If we compare k groups, we have k - 1 degrees of freedom, denoted by df in our output.

Asymp. Sig. is the p-value based on our chi-square approximation. The value of 0.145 basically means there's a 14.5% chance of finding our sample results if creatine doesn't have any effect in the population at large. So if creatine does nothing whatsoever, we have a fair (14.5%) chance of finding such minor weight gain differences just because of random sampling. If p > 0.05, we usually conclude that our differences are not statistically significant.

Asymp. Sig. is the p-value based on our chi-square approximation. The value of 0.145 basically means there's a 14.5% chance of finding our sample results if creatine doesn't have any effect in the population at large. So if creatine does nothing whatsoever, we have a fair (14.5%) chance of finding such minor weight gain differences just because of random sampling. If p > 0.05, we usually conclude that our differences are not statistically significant.

Note that our exact p-value is 0.146 whereas the approximate p-value is 0.145. This supports the claim that H is almost perfectly chi-square distributed.

Kruskal-Wallis Test - Reporting

The official way for reporting our test results includes our chi-square value, df and p as in

“this study did not demonstrate any effect from creatine,

H(2) = 3.87, p = 0.15.”

So that's it for now. I hope you found this tutorial helpful. Please let me know by leaving a comment below. Thanks!

SPSS TUTORIALS

SPSS TUTORIALS

THIS TUTORIAL HAS 72 COMMENTS:

By Ruben Geert van den Berg on May 24th, 2017

Hi Hassan!

Thanks for your comment. Perhaps first consult Simple Overview Statistical Comparison Tests.

From what you write, it's hard to tell which test is appropriate but perhaps a Wilcoxon signed-ranks test or even a paired samples t-test may be appropriate.

It's not always a black-or-white question. Sometimes several tests may be appropriate for a single question.

Hope that helps!

Ruben

By frank nukunu on June 21st, 2017

Very helpful. thanks

By Eyu Chan Hong on July 17th, 2017

Hi, may I know is there any post hoc test on this? any links?

I am using SPSS version 24.

Some said it comes with post hoc but I don't see there is a part of it after running the test.

or I just conclude the significance based on Asymp. Sig?

By Ruben Geert van den Berg on July 18th, 2017

Hi Eyu!

There's no "official" post hoc tests for Kruskal-Wallis. You've 3 basics options here:

1 - Skip them altogether -I tend towards doing so myself since the main test already established that differences between groups are not just mere sampling fluctuation.

2 - Run Mann-Whitney tests on each pair of groups and apply a Bonferroni correction on the p-values. You may lose quite some power if there's many (5 or more) groups involved.

3 - Andy Field describes a step down procedure in which you run subsequent Kruskal-Wallis tests on different subsets of groups.

Options 2 and 3 are included in SPSS if you use the new nonparametric tests dialog.

Hope that helps!

By John on August 24th, 2017

Hi Ruben,

I am running a K-W in SPSS 24 to analyze a dependent variable (biomarker of pollutant exposure) by an independent variable with 3 factors (sites).

I am using K-W because of violations of normality and equality of variance.

The K-W is significant and I want to run a post hoc test. I saw that there is no "official" post hoc test, but it would help me drive home my results/discussion to categorize these sites as more or less polluted.

1. In the "new dialogue" of SPSS 24 under "Choose Test" I am unsure whether to use a "Stepwise step-down" or "All pairwise" multiple comparison.

This is not as important because whichever one I choose the test is still significant, but I don't understand what should be used when and why. Also, I am more inclined to use the pairwise option so that I can categorize the sites by homogeneous subsets on a figure.

2. Under "Test Options" I am unsure whether to "Exclude Cases" test-by-test or listwise.

This is important because if I use one, then I have a different outcome for both the "stepwise step-down" and "all pairwise" post hoc tests.

3. From what I have read it seems that one of these scenarios is the Dunn-Bonferroni post hoc test. I am not sure if that is the one I want to use or what the names of the other tests are.

Thank you,

John